V červenci 2019 jsme byli týden v Oslu a stihli jsem toho spoustu, včetně dvou zajímavých hike za město, tak třeba se vám to bude hodit jako inspirace.

Cesta do Osla

Letěli jsme z Prahy s Norwegian. Tím, že jsme byli docela omezení ve výběru termínu, tak nás nakonec zpáteční letenky (pro oba) stály 8700 Kč. Několik dnů po zabookování nám přišel mail, že zpáteční let ruší a že nás přehodili na jiný den. Pokud je to více než 14 dnů do odletu, tak to mohou klidně udělat. Ale v profilu pak šlo letenky zadarmo přebookovat na jiný termín, takže jsme zpáteční let přehodili na další den, na který ty lety předtím byly drahé. No a jako vždy jsme letěli jen s příručákem. Ani v Praze ani v Oslu velikost zavazadel neměřili.

MHD v Oslu

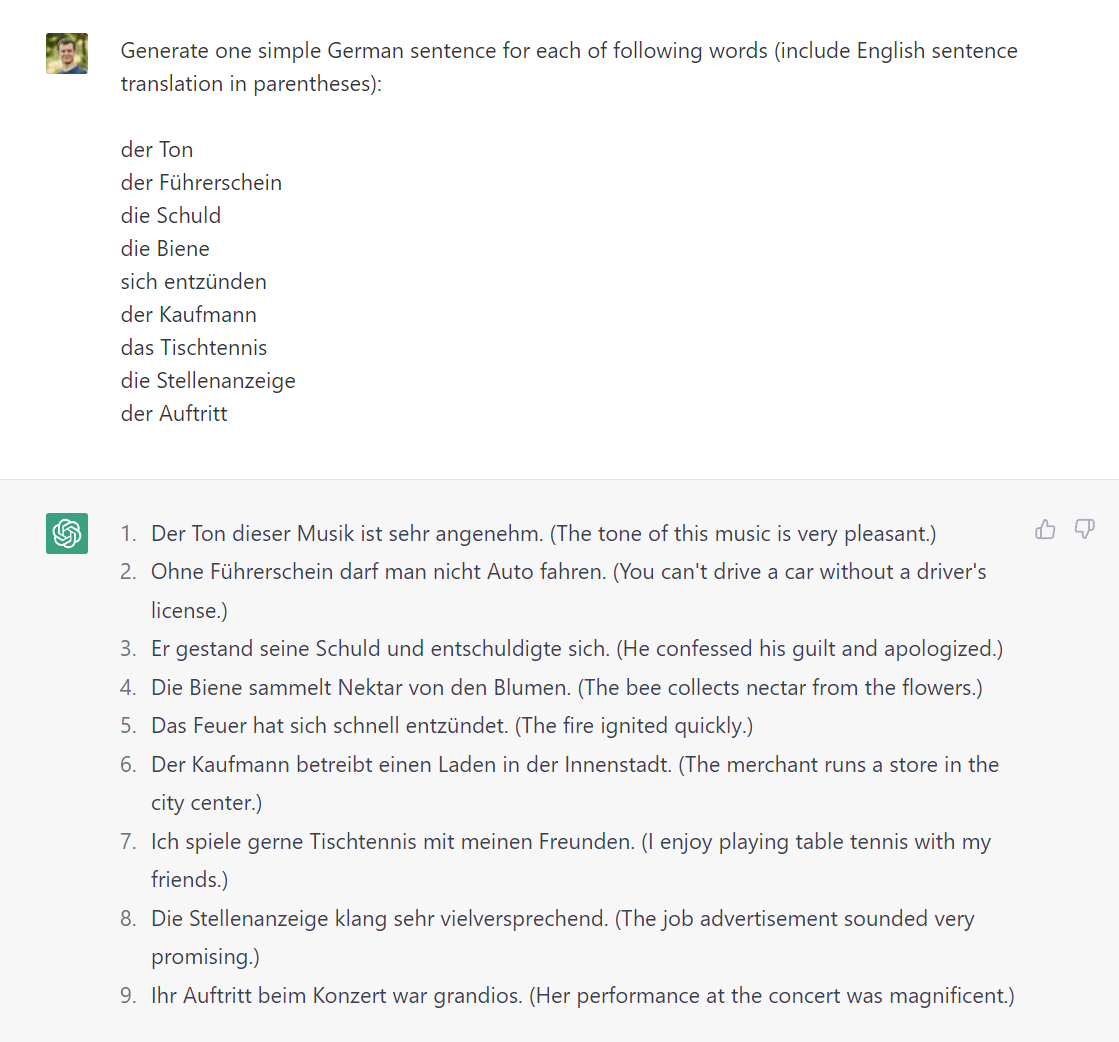

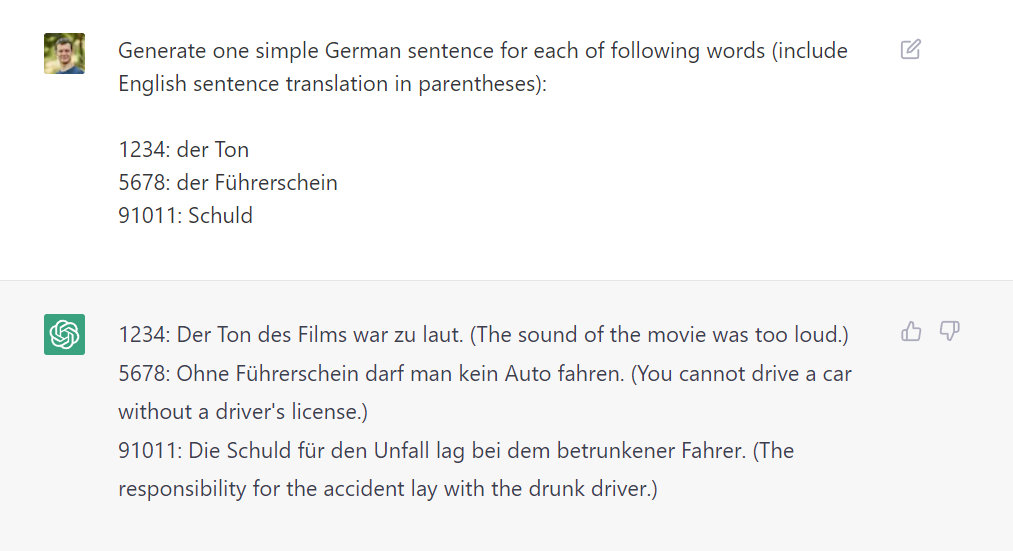

MHD v Oslu provozuje Ruter As a funguje to super. Na jízdenky se používá aplikace RuterBillet, kde si jízdenky přímo kupujete (existuje alternativa s nějakou plastovou kartičkou, to jsme nezkoumali). Koupili jsme si týdenní jízdenky. Výhoda je, že je můžete koupit dopředu a vybrat si čas začátku platnosti a případně je zapnout ručně. Kupodivu nefungovala platba kartou (MasterCard ani VISA, Airbank ani Fio), takže jsme platili PayPalem.

Většina Osla je v zóně 1, takže týdenní jízdenka nám stačila na tu jednu zónu. A na těch několik cest mimo si jde přes aplikaci přikoupit "Extra" na tu konkrétní zónu. Mají to hezky udělané, že místo toho, abyste museli zkoumat, jakou zónu chcete, jen vyberete, kam chcete jet a ono to ukáže, jakou zónu si máte koupit. Ty tickety na extra zónu jsou jen asi na hodinu až dvě, takže jde zase nastavit začátek platnosti a případně to těsně před nástupem do autobusu/lodi/vlaku odstartovat ručně.

Pro hledání spojů buď můžete používat Google Maps nebo jejich aplikaci RuterReise (ta ukazuje i zóny).

Většinu toho zvládnete linkami metra, které kromě centra jezdí po povrchu (historicky to bylo tak, že postupně zapojili samostatné tramvajové tratě do struktury metra).

Cesta z letiště

Z letiště je nejlepší jet vlakem VY. Pozor, na letišti mají mnohem lépe vidět prodej jízdenek do Airport Express Train (Flytoget), který stojí mnohem víc a není o moc rychlejší. Jízdenky na VY vlak si koupíte v automatu vlevo od toho Flytogetu. Nebo ještě lépe - pokud budete už mít dopředu koupenou jízdenku na MHD v RuterBillet, tak si jen přikoupíte zónu na letiště - je to levnější, než celá samostatná jízdenka.

Peníze a nakupování

V Norsku je docela draho, cca 2-3× než v ČR. Vzhledem k tomu, že kurz NOK je 2.7 CZK, tak se dá docela dobře orientovat podle cen, které mají kde napsané - jsou podobné jako v ČR, jen nesedí měna :-) (streetfood 110-150 NOK atd.)

Zajímavost: V Norsku fungují zálohy na PET láhve a plechovky, podobně jako v ČR na láhve od piva. (video)

Všude se dá platit kartou. I když jsme došli lesem na takovou chatu, kde měli suché záchody, tak stejně měli terminál na karty. Stejně tak kiosek na ostrůvku u pláže. A veřejné záchodky v parku mají u dveří také terminál na pípnutí kartou. Takže jsme za celý týden neměli v ruce žádné norské peníze (ale nosili jsme nějaká eura, kdybychom s kartou náhodou někde neuspěli.)

Bydleli jsme na Airbnb, takže jsme si večeře vařili a k obědu jsme měli vždycky svačinu, protože jsme stejně byli někde na výletě. Suroviny v obchodě zas tak drahé nejsou (doporučuji supermarkety Kiwi nebo Rema 1000). A třeba takový chlazený losos stojí stejně jako v ČR ;-)

Když mluvím o bydlení, tak doporučuji bydlet na metru a max pár stanic od centra, ať ušetříte čas.

Elektroauta

V Norsku se prodává víc elektroaut než těch se spalovacím motorem. Je to hlavně kvůli různým úlevám pro EV a naopak ekologické dani z aut se spalovacím motorem (více info). Vzhledem k tomu, že většinu elektřiny (95 %) získávají z vodních elektráren, to zní jako super nápad. Nevýhoda je, že rozvodná síť na to není připravena a budou do ní muset hodně investovat.

A s tím souvisí jedna zajímavost - Ruter (dopravní podnik v Oslu) zkouší na jedné krátké lince provozovat autonomní elektrické minibusy. To jsme samozřejmě museli vyzkoušet :-)

Drøbak a Oscarsborg - výlet lodí

Drøbak je vesnice asi 30km od Osla, kam se dá dojet MHD lodí (pozor, je potřeba přikoupit zóny - viz výše). Když budete stát frontu na trajekt, tak vám bude připadat, že se tam nevejdete - ale ta loď je fakt velká (cca pro 200 lidí), tak se nebojte.

Lodí můžete dojet až do Drøbaku nebo vystoupit o zastávku dříve, na ostrově Oscarsborg, kde je vojenská pevnost. Odtamtud se pak dá do Drøbaku dostat přívozem. Je to dost důležité místo norských dějin, protože v roce 1940 se jim z té pevnosti podařilo potopit německý křižník Blücher a tím zbrzdit invazi Němců do Osla tolik, že norský král a vláda stihl utéci. Doporučuji přečíst článek na Wikipedii: Battle of Drøbak Sound. My jsme tam nebyli, protože jsem to zjistil až potom.

V samotném Drøbaku je zajímavá třeba pláž, Husvikbatteriet - dělo, které se přičinilo o potopení křižníku Blücher. A kousek na kopci Veisvingbatteriet - další děla, tentokrát v lepším stavu a se super výhledem na Oscarsborg.

Zpátky z Drøbaku jsme už jeli autobusem, kterým to je rychlejší, než lodí.

Østmarka hike (16 km)

Oslomarka je označení pro lesnatou a kopcovitou krajinu v okolí Osla, kterou Norové využívají k rekreaci (výlety, kola atd.). My jsme vyrazili do východní části nazvané Østmarka.

Metrem jsme dojeli na konečnou, do Ellingsrudåsen. Tam už kousek od východu z metra (který je vyražený ve skále!) začíná turistická trasa. Nejprve jsme šli kousek po hlavní turistické, která je zároveň cyklostezka, ale pak jsme odbočili směrem na Haukåsen. Většinou se nám podobné pokusy - třeba vylézt na nějaký vysoký kopec v okolí - odmění fajn zážitkem. Cestou jsme si udělali malinkou odbočku k jezeru Svartputten a pokračovali jsme se na Haukåsen. Ten je zajímavý zejména tím, že tam je umístěný radar.

Odtamtud jsme došli na chatu Mariholtet, kde se dá občerstvit. Co nás hodně překvapilo bylo, že si místní nedávají pivo, ale kávu nebo colu. To by se v Čechách nestalo :-)

Dál jsme pokračovali po červené a pak po západní straně jezera Lutvann, odkud byl super výhled. Nakonec jsme došli na metro do Haugeurd a jeli domů.

Mrkněte na celou trasu na Wikiloc.

Výhoda chození na výlety v Norsku v létě je, že vám díky pozdnímu soumraku nehrozí, že byste se do tmy nestihli vrátit.

Holmenkolen

Holmenkollen je známý skokanský můstek, kde se konají různé důležité závody (nejen) ve skocích na lyžích. První můstek tam byl postaven koncem 19. století a zajímavé je, že ho jednou za čas zbourají a pak postaví nový a větší (naposledy v roce 2010).

Metrem dojedete do stanice Holmenkollen a odtamtud dojdete kousek k můstku. Součástí areálu je muzeum, které popisuje historii samotného můstku, ale i polárních výprav. V ceně vstupenky je i výstup (resp. vyvezení se výtahem) na vršek můstku, odkud je super výhled. Doporučuji jít nejdříve vystát tu frontu na výtah, čím tam dorazíte později, tím je větší. Ale muzeum určitě nevynechejte (můžete si ho projít až po návratu z vyhlídky).

Kromě výhledu nabízí horní plošina možnost sjet si dolů na zipline - ale mě to teda přišlo dost vysoko :-) (na fotce jsou vidět ta ocelová lana)

Hike přes Vettakollen a Ullevålseter k jezeru Sognsvann (15 km)

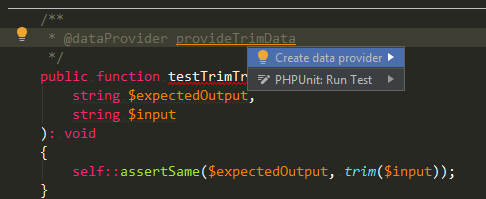

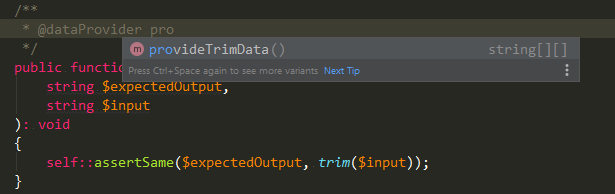

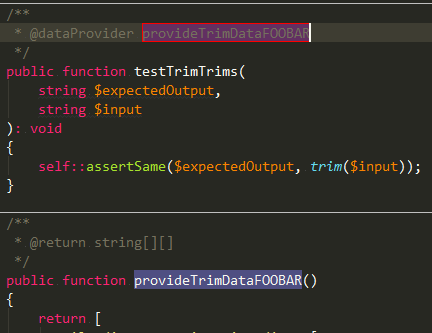

Z Holmenkollen jsme popojeli pár stanic na zastávku Vettakollen, odkud jsme vyrazili na další hike.

První část cesty je trochu do kopce, ale brzy přijde odměna v podobě super výhledu na Oslo (je to kupodivu dříve, než samotný vrchol Vettakollen - odtamtud není vidět nic).

Pak pokračujete lesem, cestou občas potkáte nějaké jezero, jezírko nebo mokřad. Nejvzdálenějším bodem je Ullevålseter, což je taková horská chata, u které se potkávají různé turistické a cyklo trasy. I když to je uprostřed ničeho, tak samozřejmě jde platit kartou. A pozor, podle webu mají v pondělí zavřeno, tak ať vás to nepřekvapí. Dali jsme si tak ke svačině kávu, horkou čokoládu a něco sladkého.

Cestou zpět jsme šli kolem dvou velkých jezer, a nakonec jsme došli k jezeru Sognsvann. To je kousek od města, takže tam spoustu místních chodí běhat.

Celá trasa opět na Wikiloc.

Grilování, jednorázové grily

Možná to bylo tím, že jsme v Oslu měli dobré počasí, ale mezi místními je hodně populární grilování na jednorázových grilech (takové ten alobalový tácek naplněný uhlím). Grilují všude možně, třeba právě u toho jezera Sognsvann.

Hodně zajímavé je, že tomu museli přizpůsobit infrastrukturu. Na místech, která se hodí na grilování, mají kromě obyčejných košů také speciální kovový koš na vyhazování těch jednorázových grilů!

Káva

Na kávu jsme nikam moc nechodili, takže mám jen jeden tip na kavárnu - Espresso House.

Na Airbnb byl Nespresso kávovar, takže jsme si kávu vařili doma. S tím souvisí zajímavost - jak jsem psal výše, tak všechno je v Norsku 2-3× dražší, ale Nespresso kapsle stojí v přepočtu stejně jako v ČR (NOK 3,89 vs CZK 9,90). Pro kapsle jsme se stavili v Nespresso obchodě na jejich "Pařížské".

Oslo - ostrovy Langøyene a Gressholmen

Kromě delší cesty lodí do Drøbaku, o které jsem psal výše, můžete v Oslu vyrazit MHD lodí na ostrovy, které jsou kousek za městem (a zase platí, že ta loď vypadá malá, ale vejde se tam spoustu lidí, takže nezůstanete na břehu ani když tam bude velká fronta).

My jsme nejdříve vyrazili na Langøyene, kde je fajn pláž. Kromě toho to je jediný z ostrovů, na kterém se může stanovat. Kousek od pláže je kiosek, který samozřejmě přijímá platební karty.

Odtamtud jsme přejeli na ostrov Gressholmen. Ten je mnohem více zarostlý, ale taky je na něm několik pláží. A zajímavostí je, že na něm kdysi bylo první norské letiště (pro hydroplány).

Oslo

A teď zajímavé věci v samotném Oslu.

Ekebergparken

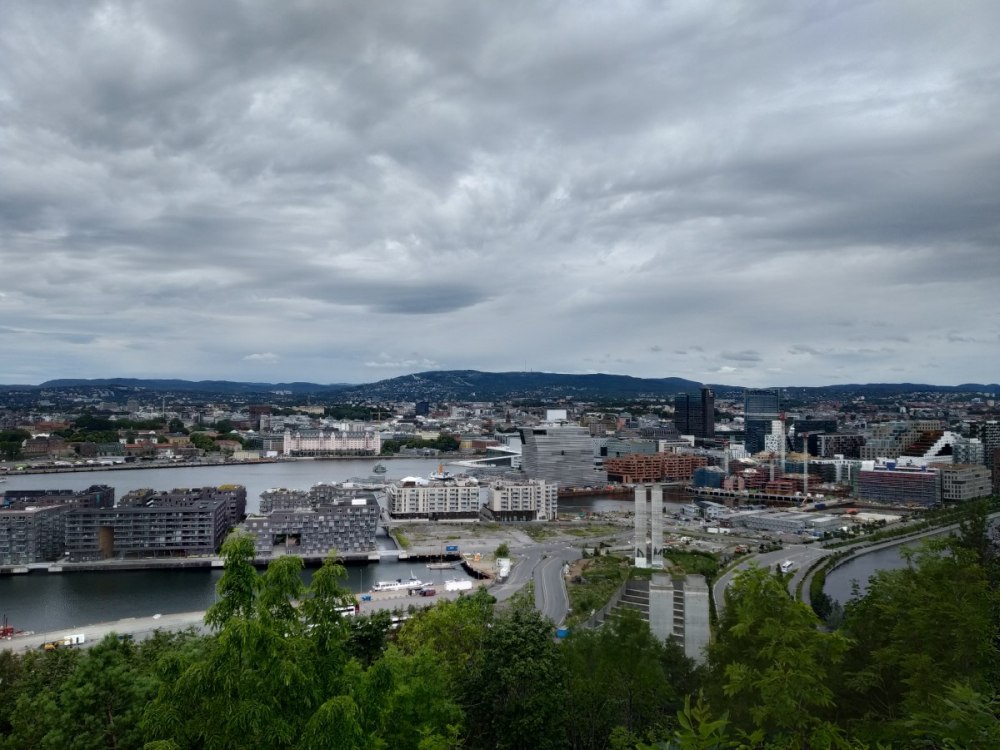

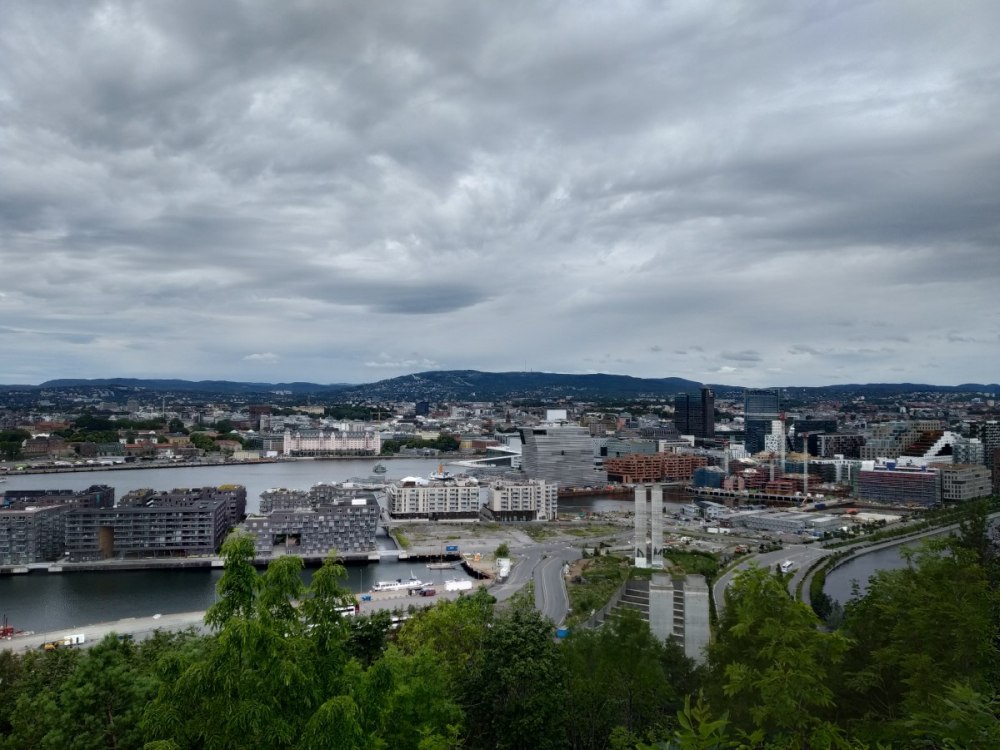

Ekebergparken je kopec s parkem na kraji Osla. Nejlepší je dojet tramvají přímo do zastávky Ekebergparken a odtamtud se projít po parku, prohlédnout si sochy a dojít na vyhlídku, odkud je vidět kontrast starých a nových budov v Oslu. Odtamtud se pak dá po cestičce sejít dolů do centra.

Opera

Operahuset je zajímavé zejména tím, že je možné vyjít až na střechu, odkud je fajn výhled.

Pevnost Akershus

Určitě doporučuji nevynechat pevnost Akershus - kromě historických budov je odtamtud hezký výhled na přístav.

Norsk Folkemuseum

Do Norsk Folkemuseum postupně přemístili různé budovy, aby mohli ukázat, jak v minulosti žili lidé (nejen) na norském venkově. Areál si buď můžete projít sami nebo se připojit k některé z komentovaných prohlídek (doporučuji!). Nejvýznamnější památkou v muzeu je kostel přibližně z roku 1200, který koncem 19. století nechal král přestěhovat tam, kde je teď.

A jedna zajímavost, kterou jsme se tam dozvěděli. Určitě znáte takové ty domky se střechami porostlými trávou (a teď nemyslím moderní kancelářské budovy). Vždycky jsem myslel, že ta tráva tam má nějaký účel, ale ono ne. Jen holt tenkrát dávali na střechu vrstvu hlíny jako izolaci a shodou okolností z ní pak rostla tráva.

Playa de Huk

Když už kvůli Folkemuseum (nebo jinému muzeu) budete na poloostrově Bygdøy, tak si vezměte plavky a běžte se vykoupat na Playa de Huk. My jsme měli štěstí na počasí - bylo 28°C, takže pláž byla narvaná :-)

Královský palác a zahrada

Když už budete v Oslu, tak se určitě zajděte podívat na královský palác a do jeho zahrad.

Frognerparken

Velký park, jedno z více turistických míst. Zejména kvůli zajímavé fontáně obklopené turisty a sloupovému sousoší.

Závěrem

V Oslu a jeho okolí toho je spoustu k vidění. Určitě doporučuji zařadit nějaké výlety za město než jen chodit mezi budovami.

]]>